Sentiment analysis using BERT models

Sentiment Analysis Prediction on Clothing Dataset

This project fine-tunes a BERT (Bidirectional Encoder Representations from Transformers) model to predict sentiment from clothing reviews.

Common Types of BERT models used for NLP tasks

| Feature | BERT | RoBERTa | DistilBERT |
|---|---|---|---|
| Training Objectives | MLM (Masked Language Model), NSP | MLM (Masked Language Model) | MLM (Masked Language Model) plus distillation losses from a BERT teacher |
| Data Preprocessing | Static masking (masks generated once during preprocessing) | Dynamic masking (a new mask each time a sequence is seen), no NSP | Dynamic masking, no NSP |
| Next Sentence Prediction (NSP) | Yes | No | No |
| Training Duration | Baseline | Longer, on a larger dataset (160GB vs. 16GB of text) | Shorter (distillation into fewer layers) |
| Sentence Embeddings | [CLS] token | `<s>` token (RoBERTa's equivalent of [CLS]) | [CLS] token |
| Batch Size | 256 sequences | Larger batches (8K sequences) | Large batches (up to 4K) via gradient accumulation |
| Model Size | ~110M parameters (base) | ~125M parameters (base) | ~66M parameters |
| Number of Layers | 12 (base) or 24 (large) | 12 (base) or 24 (large) | 6 |
| Performance | Benchmark model | Improved performance on downstream tasks | Trade-off between size and quality (about 97% of BERT's language understanding, 40% smaller and 60% faster, per its authors) |
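The "Data Preprocessing" row contrasts static masking (BERT) with dynamic masking (RoBERTa, DistilBERT). The following is a minimal sketch of that difference, using BERT's 80/10/10 masking rule. Several simplifications are assumptions. It works on plain string tokens rather than token IDs, it does not skip special tokens, and each token is selected independently with probability 0.15. The original BERT pipeline also duplicated its data 10 times with different static masks, which is not modelled here.

```python
import random

MASK = "[MASK]"


def mlm_mask(tokens, vocab, rng, mask_prob=0.15):
    """Return (masked_tokens, labels) using BERT's 80/10/10 masking rule.

    Each token is selected with probability `mask_prob`. A selected token is
    replaced by [MASK] 80% of the time, by a random vocabulary token 10% of
    the time, and left unchanged 10% of the time. `labels[i]` holds the
    original token where the model must predict it, otherwise None.
    """
    masked = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            labels[i] = tok  # the model is trained to recover this token
            r = rng.random()
            if r < 0.8:
                masked[i] = MASK
            elif r < 0.9:
                masked[i] = rng.choice(vocab)
            # else: keep the original token unchanged
    return masked, labels


def static_masking(tokens, vocab, epochs, seed=0):
    """Static masking: one mask is drawn up front and reused every epoch."""
    rng = random.Random(seed)
    fixed = mlm_mask(tokens, vocab, rng)
    return [fixed] * epochs


def dynamic_masking(tokens, vocab, epochs, seed=0):
    """Dynamic masking: a fresh mask is drawn every time the sequence is used."""
    rng = random.Random(seed)
    return [mlm_mask(tokens, vocab, rng) for _ in range(epochs)]
```

With static masking, the model sees the same masked positions in every epoch. With dynamic masking, it sees different ones each time. The MLM loss is computed only at positions where `labels[i]` is not None.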

Project Goals

1. EDA (Exploratory Data Analysis):

• Perform data cleaning and exploratory data analysis (EDA) on the dataset to uncover insights from the product reviews.

2. Test different types of pretrained BERT models on the dataset:

• Test pretrained BERT models from Hugging Face with different numbers of output classes. This step evaluates how well the pretrained models perform on the dataset without fine-tuning.

3. Decide on the number of output classes and the type of BERT model:

• Choose the number of output classes (2, 3 or 5) and the type of BERT model (BERT, RoBERTa or DistilBERT) for the final sentiment analysis model.

4. Fine-tune the BERT model:

• Fine-tune the chosen BERT model on the dataset, using the chosen number of output classes.

About the Dataset

The dataset is Women's E-Commerce Clothing Reviews from Amazon, obtained from Kaggle. You can download the dataset from here.

NOTE: The fine-tuning steps outlined in this project also apply if you want to fine-tune a BERT model on your own dataset.
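The cleaning part of the EDA step (goal 1) can be sketched with the standard library alone. This is a minimal sketch, not the project's own code. The column names `Review Text` and `Rating` are assumptions about the Kaggle CSV. The cleaning rules are also illustrative choices: drop rows with missing text or rating, collapse whitespace, and remove duplicate texts.

```python
import csv
from collections import Counter
from statistics import median


def clean_reviews(rows, text_col="Review Text", rating_col="Rating"):
    """Return (text, rating) pairs after basic cleaning.

    Drops rows with missing review text or a non-numeric rating, collapses
    runs of whitespace, and removes exact duplicate review texts.
    """
    cleaned, seen = [], set()
    for row in rows:
        text = " ".join((row.get(text_col) or "").split())
        rating = (row.get(rating_col) or "").strip()
        if not text or not rating.isdigit():
            continue  # missing review text or rating
        if text in seen:
            continue  # exact duplicate review
        seen.add(text)
        cleaned.append((text, int(rating)))
    return cleaned


def summarize(reviews):
    """Basic EDA numbers: review count, rating distribution, median length in words."""
    return {
        "n_reviews": len(reviews),
        "rating_counts": dict(sorted(Counter(r for _, r in reviews).items())),
        "median_words": median(len(t.split()) for t, _ in reviews) if reviews else 0,
    }
```

To apply it to the downloaded file, open the CSV with `newline=""` and pass `csv.DictReader(f)` to `clean_reviews`. The rating counts from `summarize` also show how imbalanced the classes are before any labels are assigned.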

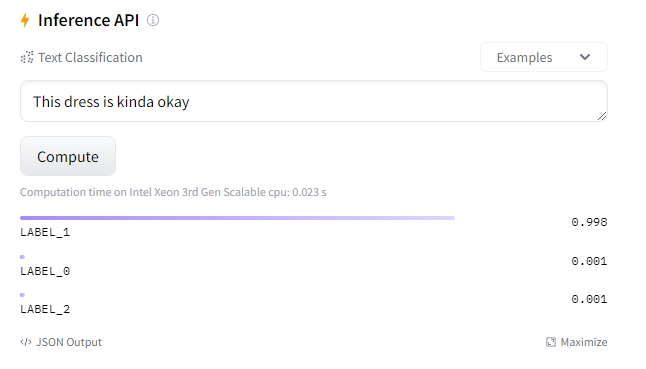

Results of the pretrained BERT models (without fine-tuning):

| Model | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|
| Pretrained BERT (5 output classes) | 0.566 | 0.653 | 0.566 | 0.592 |
| Pretrained RoBERTa (3 output classes) | 0.793 | 0.771 | 0.793 | 0.776 |
| Pretrained DistilBERT (2 output classes) | 0.837 | 0.850 | 0.837 | 0.842 |
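Recall equals accuracy in every row of the table above. That is exactly what happens when per-class recall is averaged with weights proportional to each class's support. This suggests, though the averaging mode is not stated, that precision, recall and F1 are weighted averages. The sketch below computes weighted metrics in plain Python and is intended to mirror scikit-learn's `precision_recall_fscore_support(..., average="weighted")` with zero division treated as 0.

```python
from collections import Counter


def weighted_metrics(y_true, y_pred):
    """Accuracy plus support-weighted precision, recall and F1."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    n = len(y_true)
    support = Counter(y_true)
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / n
    precision = recall = f1 = 0.0
    for c in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        n_pred = sum(p == c for p in y_pred)
        p_c = tp / n_pred if n_pred else 0.0
        r_c = tp / support[c] if support[c] else 0.0
        f_c = 2 * p_c * r_c / (p_c + r_c) if (p_c + r_c) else 0.0
        w = support[c] / n  # weight = share of true examples in class c
        precision += w * p_c
        recall += w * r_c
        f1 += w * f_c
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}
```

Weighted recall is the sum over classes of (n_c / n) * (tp_c / n_c), which simplifies to the sum of tp_c over n. That is accuracy, which is why the two columns match.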

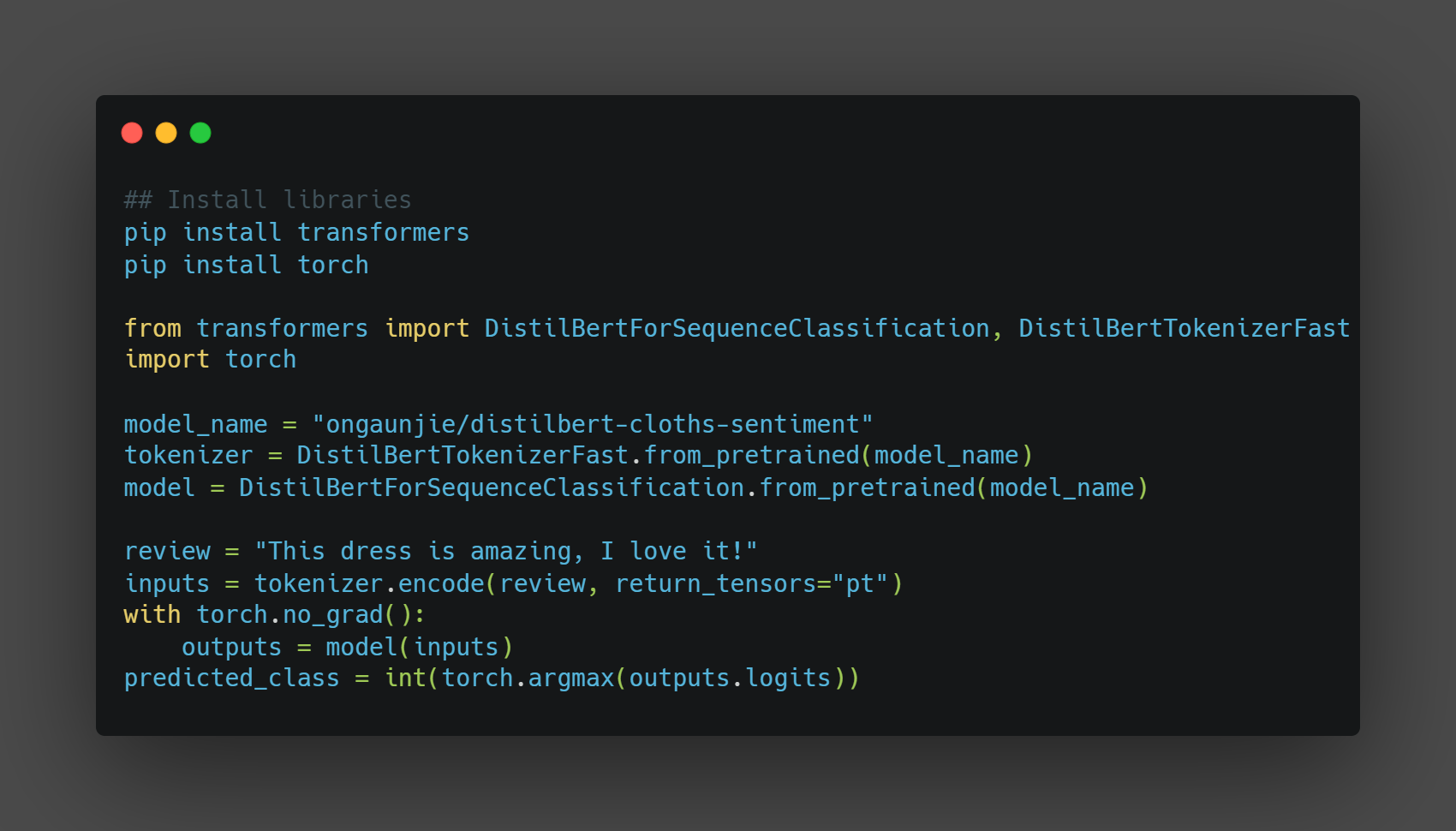

- Pretrained BERT with 5 output classes (1 star to 5 stars) - Link to the model

- Pretrained RoBERTa with 3 output classes (0: Negative, 1: Neutral, 2: Positive) - Link to the model

- Pretrained DistilBERT with 2 output classes (0: Negative, 1: Positive) - Link to the model
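Each pretrained model listed above returns one raw score (logit) per output class. Those scores still have to be decoded into a label. This minimal sketch does that with a softmax and an argmax, under some assumptions. The scheme keys (`bert_5`, `roberta_3`, `distilbert_2`) are hypothetical names. Output index i is assumed to map to the i-th label in the order listed above, for example index 0 to "1 star" for the BERT model. Each checkpoint's `id2label` config is the place to confirm this.

```python
import math

# Hypothetical scheme names; label order assumed to follow the list above.
LABELS = {
    "bert_5": ["1 star", "2 stars", "3 stars", "4 stars", "5 stars"],
    "roberta_3": ["Negative", "Neutral", "Positive"],
    "distilbert_2": ["Negative", "Positive"],
}


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decode(logits, scheme):
    """Return (label, probability) for the highest-scoring class."""
    labels = LABELS[scheme]
    if len(logits) != len(labels):
        raise ValueError(f"{scheme} expects {len(labels)} scores, got {len(logits)}")
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]
```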

Decision on how many output classes to use for fine-tuning

This project uses 3 output classes:

(0: Negative, 1: Neutral, 2: Positive)
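Using 3 classes means each review's star rating has to be converted into one of these labels. The project does not state how this was done. The sketch below assumes a common split: ratings 1 and 2 are Negative, 3 is Neutral, and 4 and 5 are Positive. It also builds the id/label dictionaries for the scheme above.

```python
# Label scheme used for fine-tuning (from the project); rating split is an assumption.
ID2LABEL = {0: "Negative", 1: "Neutral", 2: "Positive"}
LABEL2ID = {label: idx for idx, label in ID2LABEL.items()}


def rating_to_label(rating: int) -> int:
    """Map a 1-5 star rating to a class id (assumed 1-2 / 3 / 4-5 split)."""
    if not 1 <= rating <= 5:
        raise ValueError(f"rating must be between 1 and 5, got {rating}")
    if rating <= 2:
        return LABEL2ID["Negative"]
    if rating == 3:
        return LABEL2ID["Neutral"]
    return LABEL2ID["Positive"]
```

With Hugging Face Transformers, dictionaries like these can be passed as `num_labels`, `id2label` and `label2id` to `AutoModelForSequenceClassification.from_pretrained`. The fine-tuned model then reports readable label names instead of bare indices.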

Decision on which type of BERT model to use for fine-tuning

The model chosen for fine-tuning is DistilBERT. Comparison of the fine-tuned DistilBERT model with the pretrained models:

| Model | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|
| Pretrained RoBERTa (3 classes) | 0.789 | 0.772 | 0.789 | 0.773 |
| Pretrained DistilBERT (2 classes) | 0.837 | 0.850 | 0.837 | 0.842 |
| Fine-tuned DistilBERT (3 classes) | 0.849 | 0.860 | 0.849 | 0.853 |
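The gains in the comparison table can be computed directly from the F1 scores it reports. The 3-class models are the like-for-like comparison. The 2-class DistilBERT model does not have to predict the Neutral class, so its scores come from an easier task. The helper name `f1_gains` is hypothetical.

```python
# F1 scores copied from the comparison table above.
F1_SCORES = {
    "pretrained RoBERTa (3 classes)": 0.773,
    "pretrained DistilBERT (2 classes)": 0.842,
    "fine-tuned DistilBERT (3 classes)": 0.853,
}


def f1_gains(scores, reference):
    """Absolute F1 difference between `reference` and every other model."""
    return {name: scores[reference] - s for name, s in scores.items() if name != reference}


for name, gain in f1_gains(F1_SCORES, "fine-tuned DistilBERT (3 classes)").items():
    print(f"vs {name}: {gain:+.3f} F1")
```

This prints a gain of +0.080 F1 over the pretrained 3-class RoBERTa model and +0.011 over the pretrained 2-class DistilBERT model.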

Check out my GitHub repository for more info:

View on GitHub